A while ago I did a video on the PST Import Service on Microsoft 365 / Office 365. You can see it here:

Office365 Exchange Online PST Import Service

Enough has changed since then that a revisit is needed.

What the PST Import Service does is allow you to bulk-copy PST files to Azure and have Microsoft import them into your mailboxes – either the primary mailbox or the online archive. It’s a very useful service, and I’ve lost track of how many times I’ve used it over the years. It’s also very easy to use – so let’s run through the process.

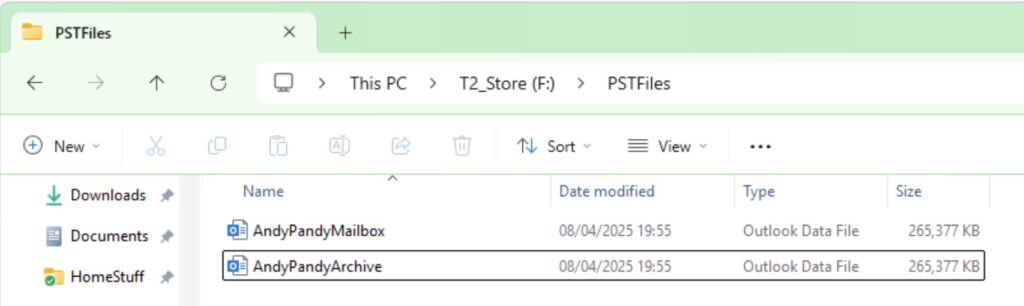

For the purposes of this demo I have a single user – Andy Pandy – and a couple of PST files. One I want to land in his primary mailbox, and one I want to land in his archive:

You Need the Right Permissions!

This is a common issue when trying to use this service – the user you’re going to use for this must be assigned the Mailbox Import Export role in Exchange Online. What’s annoying is that while this is quick to add to a user, it’s slow to apply. You also need to be assigned the Mail Recipients role. By default, this role is assigned to the Organization Management and Recipient Management role groups in Exchange Online.

One of my early tasks is usually to create a role group that has the relevant rights – adding members to the group applies the permissions far faster than applying them to individual users.

Find the Import Service

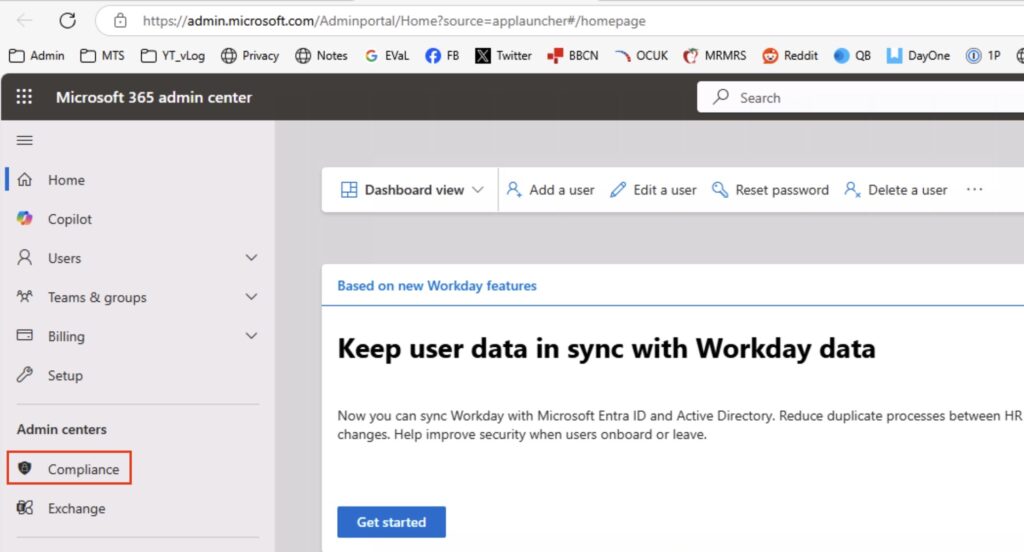

I’ll take you through the whole process. Firstly, log on to Microsoft 365, and go to the Admin Centre. You need the Compliance portal – or Purview.

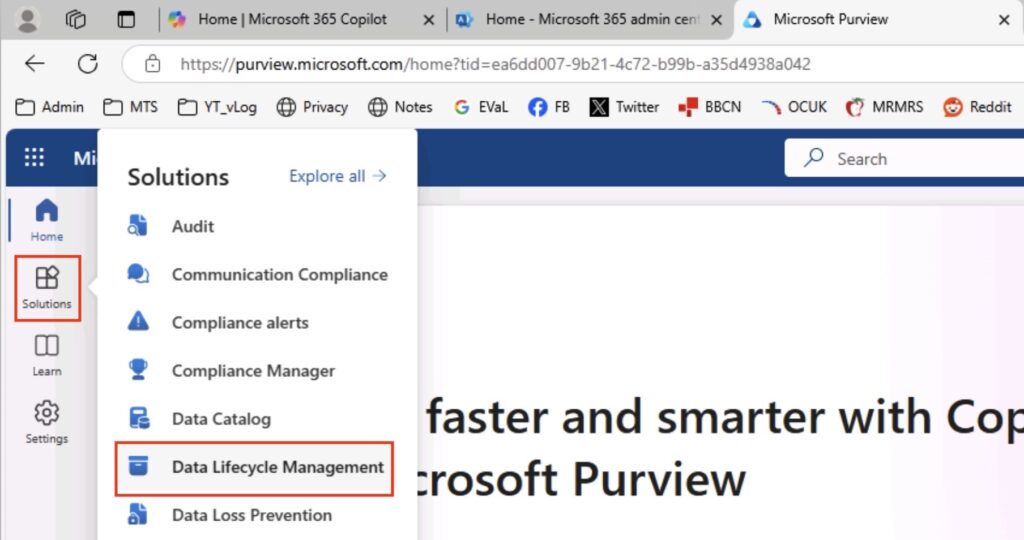

I’m going to assume that you’re using the new admin portal rather than the classic – the option is still easy to find in the classic portal however. The option you want to select is ‘Solutions’ in the left-hand menu bar, followed by ‘Data Lifecycle Management’.

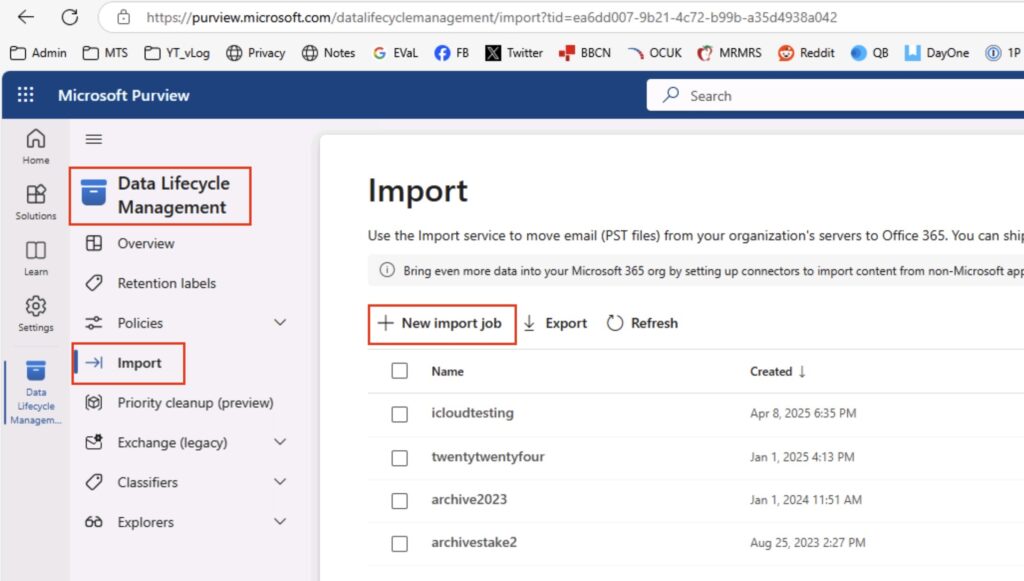

Create a new Import Job

In that area, you will now see the ‘Import’ option on the left – select that, then click the ‘+’ next to ‘New Import Job’ in the menu to start an import. You’ll see that I have several jobs in there already.

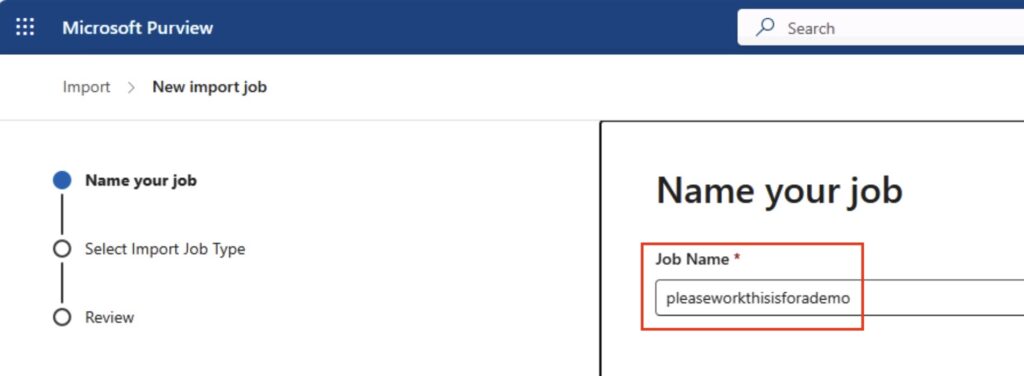

The first thing you will need to do is name your job. It’s quite fussy on the naming:

Job Name: 2-64 lowercase letters, numbers or hyphens, must start with a letter, no spaces
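If you're scripting job names (or just want to sanity-check one before you type it in), the rule above is easy to express as a regular expression. This is a minimal sketch based purely on the rule as quoted here; the function name is my own, and the portal may apply additional checks that this doesn't know about.

```python
import re

# Rule as quoted above: 2-64 characters, lowercase letters, digits or hyphens,
# first character must be a letter, no spaces.
JOB_NAME_PATTERN = re.compile(r"[a-z][a-z0-9-]{1,63}")


def is_valid_job_name(name: str) -> bool:
    """Return True if the name satisfies the import job naming rule."""
    return JOB_NAME_PATTERN.fullmatch(name) is not None


if __name__ == "__main__":
    # Hypothetical candidate names, purely for illustration.
    for candidate in ["andy-pst-demo", "Andy Demo", "1st-import", "a"]:
        print(f"{candidate!r}: {is_valid_job_name(candidate)}")
```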

You can see that I have given mine a suitable demo name:

Click ‘next’ right down the bottom of the screen – be careful with this, if you can’t see it, expand your browser window! It doesn’t always auto-scale, which can be irritating.

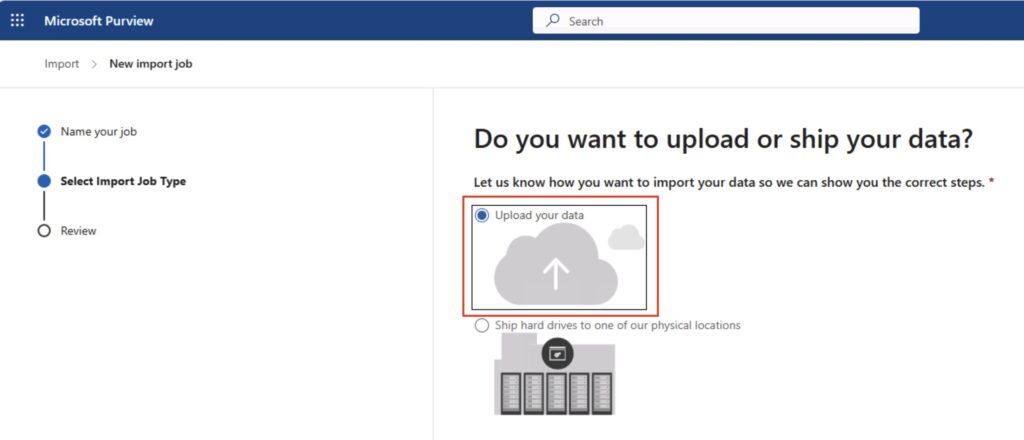

Select your import type

Next, we’re going to choose to upload our data. You can ship physical drives to Microsoft, however I’m not going to cover that process here. Select ‘Upload your data’, and click ‘next’ at the bottom.

Import Data

This is the interesting bit, and it can be quite fussy so you must be accurate with these items!

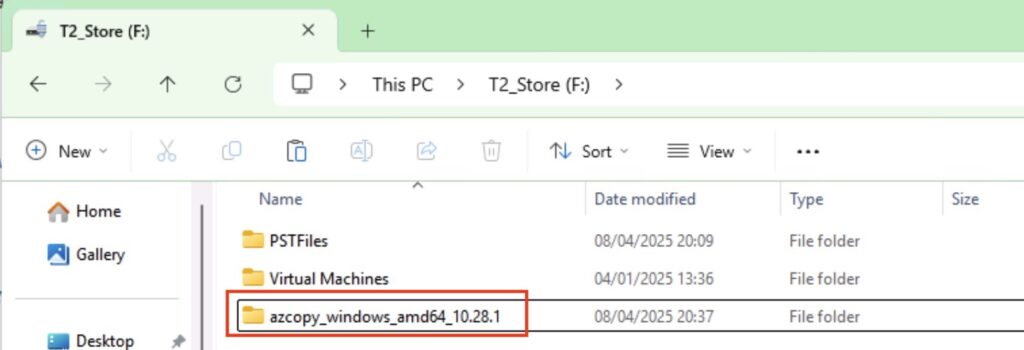

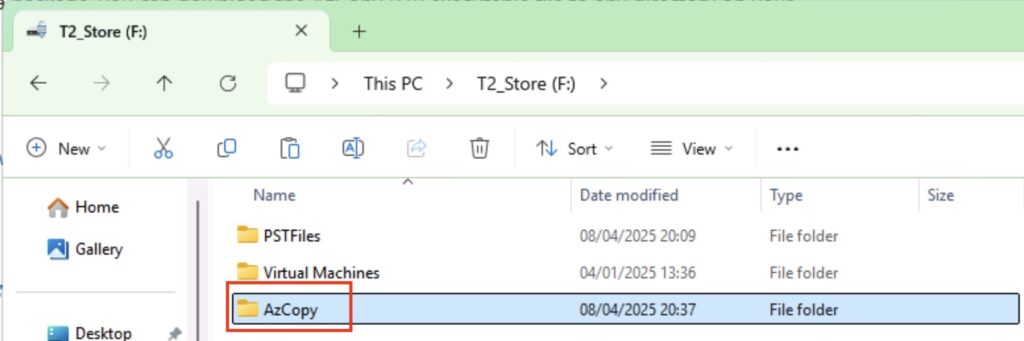

You will first need to download the ‘Azure AzCopy’ tool. You can get it here:

There are significant changes between earlier versions of AzCopy and later ones in terms of command line options, so I’m going to assume you have downloaded the latest version (as of 2025-04-08) and we’ll be using that. We’ll be doing this from the command line, so I tend to rename the directory after I’ve expanded it to make it easier – you can see below the original, and where I have renamed it:

Let’s Copy Our Data!

So let’s form our AzCopy command – fire up notepad as this may take you a couple of goes to get right ☺️ The command you want is:

AzCopy.exe copy SourceDirectory "targetblob" --overwrite=true --recursive=true

NOTE: Make sure you put the target blob in quotes – it often has syntax-impacting characters in it (such as ‘&’) that will cause you problems.

Let’s formulate the full command. First, the ‘SourceDirectory’. In my example, my PST files are in ‘F:\PSTFiles’, so my source is ‘F:\PSTFiles\*’. The trailing ‘\*’ is important! If you just use ‘F:\PSTFiles’ then you’ll end up with a folder called ‘PSTFiles’ in the blob, and the service will complain that it cannot find your files with “The PST could not be found in the storage account” or similar.

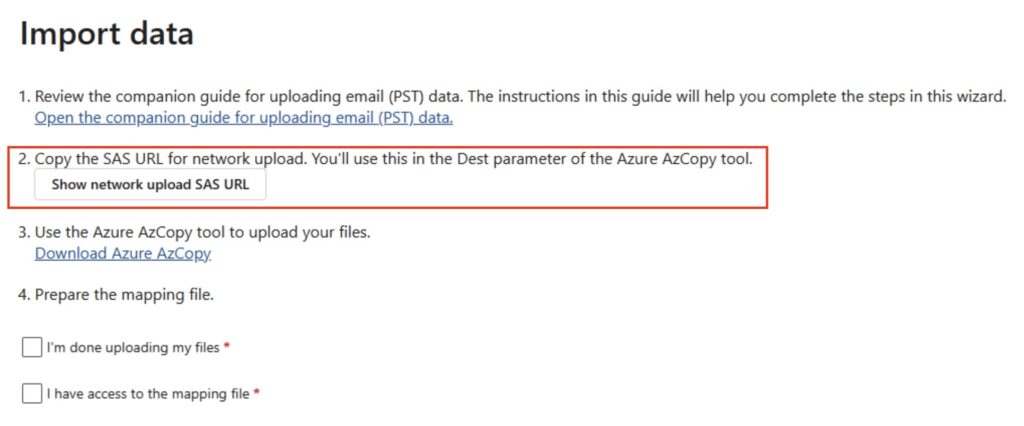

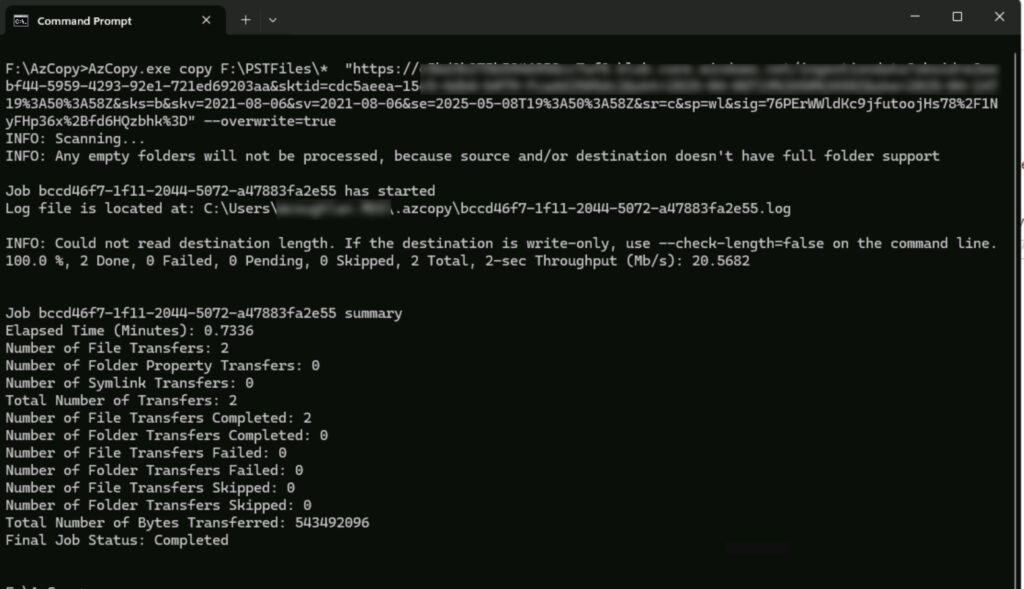

Next, the ‘TargetBlob’. You get this by clicking on the ‘Show Network Upload SAS URL’:

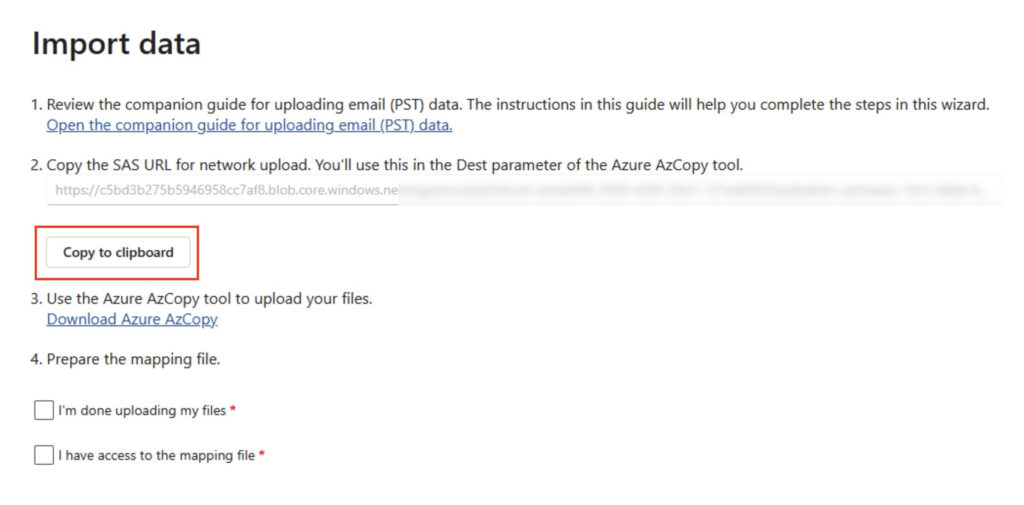

After a moment or two, the URL will be generated, and you will see the option to copy the URL to the clipboard – do that, and paste it into Notepad.

So we now have the source and the target, so let’s formulate our command based on:

AzCopy.exe copy SourceDirectory "targetblob" --overwrite=true --recursive=true

You should end up with something like:

AzCopy.exe copy F:\PSTFiles\* "https://c5bd3b275b5946958cc7af8.blob.core.windows.net/ingestiondata?skoid=e2eebf44-5959-4293-92e1-721ed69203aa&sktid=cdc5aeea-15c5-4db6-b079-fcadd2505dc2&skt=2025-04-08T19%3A50%3A5assjdh=2025-04-14T19sds%3A50%3A58Z&sks=b&skv=2021-08-06&sv=2021-08-06&se=2025-05-08T1asas9%3A50%3A58Z&sr=c&sp=wl&sig=76PErWWldKc9jfutoojHs78%2F1NyFHp36x%2Bfd6HQzbhk%3D" --overwrite=true

I’ve randomised the blob, so don’t even think about it 🤓 The item on the end ensures files can be overwritten if you get the command wrong and need to re-run. You can see why I suggested copying it into Notepad.
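If you'd rather not hand-edit the command in Notepad, here's a rough Python sketch that assembles the same arguments. It only builds the argument list; the function name and the placeholder SAS URL are mine, and the "does this look like a SAS URL" check is a simple assumption (HTTPS plus a `sig` parameter), not anything the service defines.

```python
import ntpath
from urllib.parse import parse_qs, urlsplit


def build_azcopy_args(source_dir: str, sas_url: str, overwrite: bool = True) -> list[str]:
    """Build the argument list for the 'AzCopy.exe copy' command described above."""
    parts = urlsplit(sas_url)
    # Assumption: a usable SAS URL is HTTPS and carries a 'sig' query parameter.
    if parts.scheme != "https" or "sig" not in parse_qs(parts.query):
        raise ValueError("This does not look like a complete SAS URL")
    # The trailing \* uploads the PSTs themselves rather than the folder.
    source = ntpath.join(source_dir, "*")
    flag = "--overwrite=true" if overwrite else "--overwrite=false"
    return ["AzCopy.exe", "copy", source, sas_url, flag]


if __name__ == "__main__":
    # Placeholder URL for illustration only; paste your own SAS URL here.
    demo_url = "https://example.blob.core.windows.net/ingestiondata?sv=2021-08-06&sig=PLACEHOLDER"
    print(build_azcopy_args(r"F:\PSTFiles", demo_url))
```

Passing a list like this to `subprocess.run(args, check=True)` from the AzCopy directory sidesteps the quoting problem altogether, because no shell gets the chance to interpret the ‘&’ characters in the URL.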

We now have our command, so let’s execute the copy. Fire up a DOS prompt, and go to the directory containing our AzCopy. Then, simply copy/paste in the command we have created above.

Hopefully you’ll see your files have been copied, and at a decent throughput too! I can often exhaust my 1Gbps connection so it doesn’t appear to be highly throttled.

Prepare the Mapping File

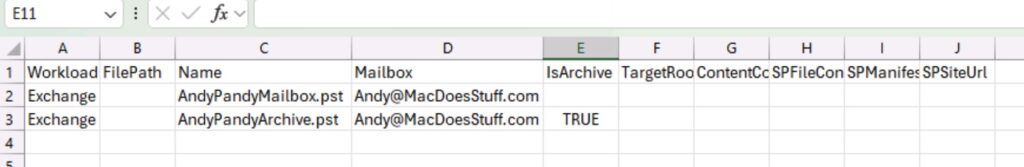

Next, we need a CSV file that maps the various PST files to the right mailboxes and the location in that mailbox. You can download my example for this demo from here:

You can see all the available options for the mapping file here:

Use network upload to import your organization’s PST files to Microsoft 365

NOTE: Case is important for both the PST file and the Email Address. That second one surprised me – I don’t remember that being the case. I now copy the email from the Admin centre (for one or two accounts) or grab via PowerShell.
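For more than a handful of PSTs I'd generate the mapping file rather than type it. The sketch below writes the column layout from Microsoft's network upload documentation, with ‘FilePath’ left blank because the PSTs sit in the root of the container. The PST file names and the email address are hypothetical stand-ins for this demo, and ‘/’ as the target folder is just one of the documented choices, so check the options linked above before relying on it.

```python
import csv
import io

# Column headers as listed in Microsoft's PST import mapping file documentation.
HEADER = ["Workload", "FilePath", "Name", "Mailbox", "IsArchive",
          "TargetRootFolder", "ContentCodePage", "SPFileContainer",
          "SPManifestContainer", "SPSiteUrl"]


def mapping_row(pst_name: str, mailbox: str, to_archive: bool,
                target_folder: str = "/") -> list[str]:
    """One mapping row; FilePath is blank because the PST is in the container root."""
    is_archive = "TRUE" if to_archive else "FALSE"
    return ["Exchange", "", pst_name, mailbox, is_archive, target_folder, "", "", "", ""]


def build_mapping_csv(rows: list[list[str]]) -> str:
    """Return the mapping file contents as CSV text, header first."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(HEADER)
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical file names and address: match the case of your real ones exactly.
    rows = [
        mapping_row("AndyPrimary.pst", "andy.pandy@contoso.com", to_archive=False),
        mapping_row("AndyArchive.pst", "andy.pandy@contoso.com", to_archive=True),
    ]
    print(build_mapping_csv(rows), end="")
```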

Upload the Mapping File

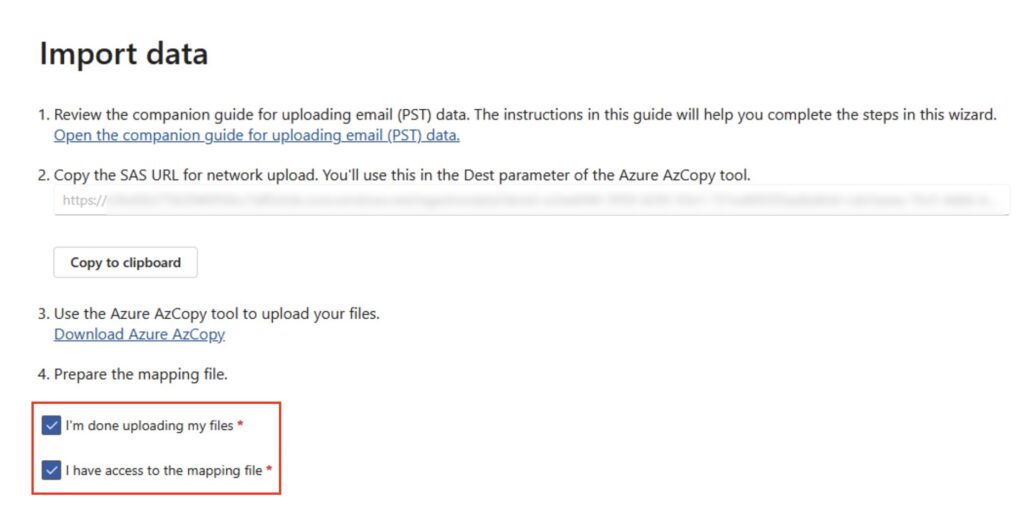

We have the mapping file, and we’re ready to continue. Select ‘I’m done uploading my files’ and ‘I have access to the mapping file’, followed by ‘Next’:

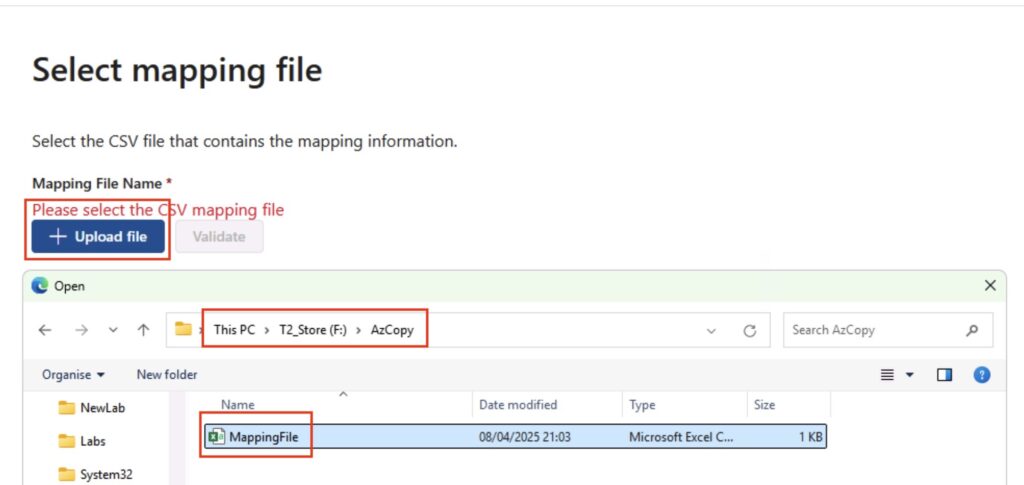

You will now be asked to upload your mapping file – hit that button, and select your mapping file:

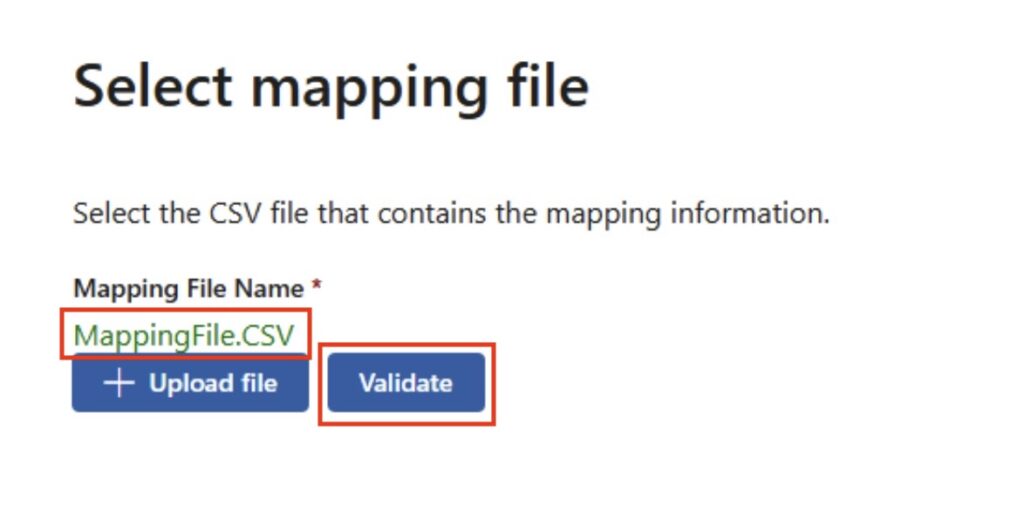

Once you have uploaded the mapping file, you will want to hit ‘Validate’ – it will check for common errors:

Hopefully, your mapping file will show up green as in the above example. The most common error I see is ‘The PST could not be found in the storage account’ – this is because you’ve uploaded the directory, not the individual PSTs! Refer back to including the ‘*’ in the source path. You can of course include the path in the mapping file; however, I find that in itself can be quite fussy – stick everything in the root and you’ll be fine.
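Both of the usual causes of that error (wrong case, or PSTs sitting inside a folder) can be spotted before you hit ‘Validate’ if you have a list of what actually landed in the container. This is a small sketch under that assumption; the function name is mine, and the uploaded names are whatever listing you have to hand, with ‘/’ as the folder separator.

```python
def check_pst_names(mapping_names: list[str], uploaded_names: list[str]) -> list[tuple[str, str]]:
    """Return (name, hint) pairs for mapping entries with no exact match in the upload."""
    uploaded = set(uploaded_names)
    by_lower = {name.lower(): name for name in uploaded_names}
    # Blobs uploaded inside a folder, keyed by their bare file name.
    by_basename = {name.rsplit("/", 1)[-1]: name for name in uploaded_names if "/" in name}
    problems = []
    for name in mapping_names:
        if name in uploaded:
            continue  # exact, case-sensitive match in the root
        if name.lower() in by_lower:
            problems.append((name, f"case differs from uploaded '{by_lower[name.lower()]}'"))
        elif name in by_basename:
            problems.append((name, f"uploaded inside a folder as '{by_basename[name]}'"))
        else:
            problems.append((name, "not uploaded"))
    return problems


if __name__ == "__main__":
    # Hypothetical names for illustration.
    mapping = ["AndyPrimary.pst", "AndyArchive.pst"]
    uploaded = ["andyprimary.pst", "PSTFiles/AndyArchive.pst"]
    for name, hint in check_pst_names(mapping, uploaded):
        print(f"{name}: {hint}")
```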

Assuming you’re all good – click ‘Next’. You will now receive a summary/review of the import job you have just created – you can click ‘Next’ on this one too, assuming everything looks OK.

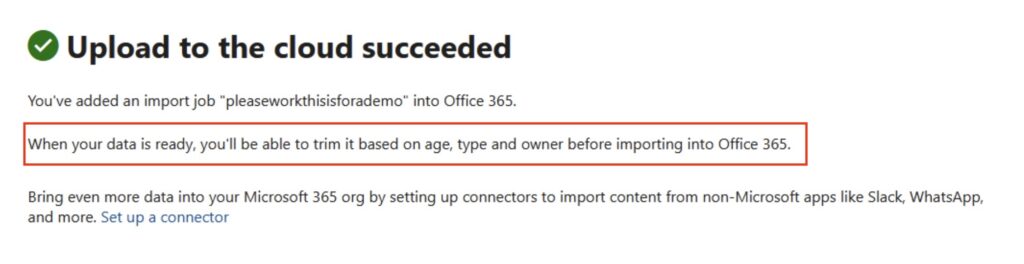

Click ‘Submit’ at the bottom. The Import Service will now examine the PST files – it has not yet started the importing!

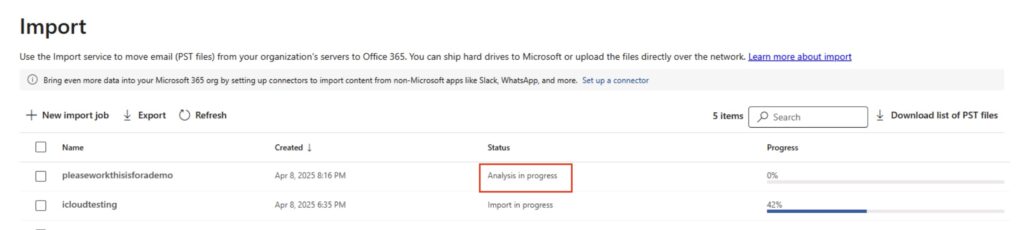

Click ‘Done’ and you will be taken back to the import screen – you will need to wait for the analysis phase of the import job to complete. Depending on the number of PST files and their size, this can take a little while.

Import Completed (Oh no it isn’t)

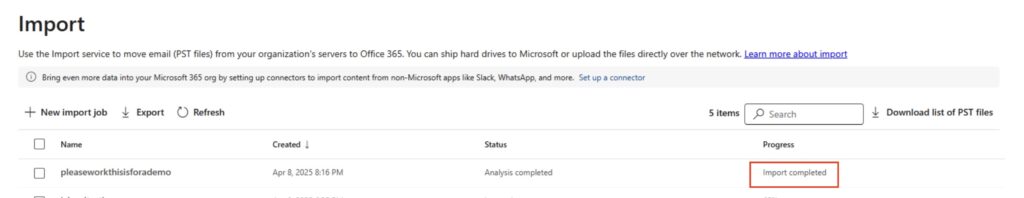

After a while, the import job will change to ‘Import Completed’ – don’t be fooled by this, the Import itself has NOT been done yet. Honest.

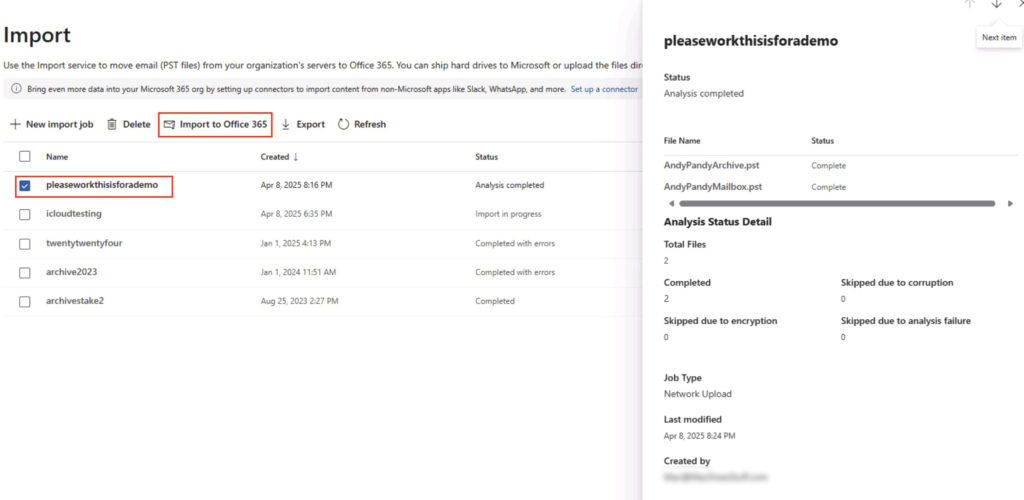

You want to select the job and make sure that the files have completed – none have been skipped etc. When you’re happy, hit that ‘Import to Office 365’ button. Interesting given it’s just said the import is done, right?

Filter Data

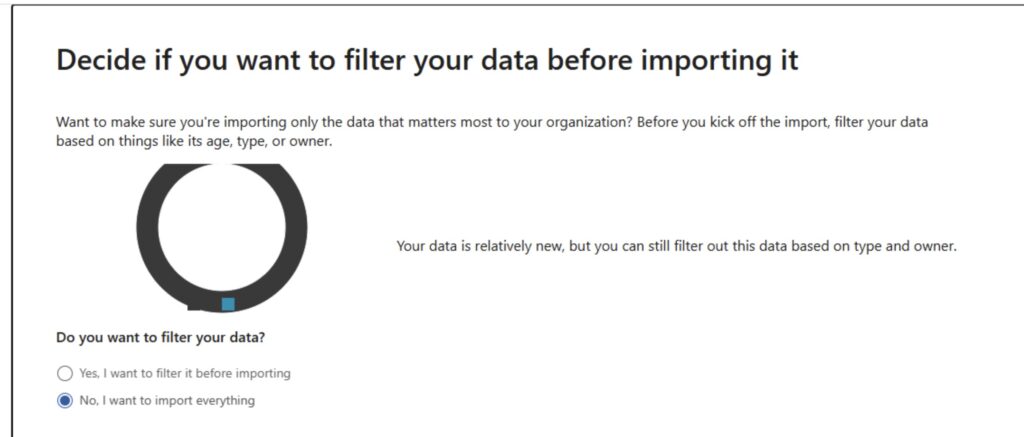

You can now, if you wish, filter the data. I’m not going to for the purpose of this demo; however, you could for example choose not to import data over 2 years old.

Click ‘next’ after you have selected the right option for you. You’ll now be told what the import job is going to do.

See now the cool stuff can start. Hit ‘Submit’ and hopefully off it will go!

Once the job is going properly, you can monitor its progress:

How Fast Is It?

I find it sometimes difficult to fully ascertain overall ingestion performance for Microsoft 365. Trying to keep up with the developing throttling situation is sometimes challenging. Saying that, you can carry out some reasonable guesswork.

I typically see about 20-30GB/day ingestion rates per mailbox, which is fairly decent.

Common Gotchas?

There’s the permission thing I mentioned at the beginning – it’s irritating to discover you need these roles, only to then have to wait a while for them to apply.

Message Sizing

There’s a 150MB message size limit in Exchange Online – you cannot therefore import messages that are larger than 150MB.

The Online Archive

The Online Archive is initially 50GB or 100GB, however it can auto-expand up to 1.5TB. There are several things to consider around auto-expanding archives, however the most relevant one to this conversation is around ingestion rate – the fast ingestion rate only works up to the 50GB or 100GB limit (i.e., whatever the license limit is that you have). Beyond that, you’re relying on the auto-expanding process and that’s limited to circa 1GB/day. Also, in my experience, the Import Service fails when it hits that auto-expanding limit. I’d investigate other options if that’s your use case.
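To put some rough numbers on that, here's a back-of-the-envelope calculation. It assumes an empty archive, uses 25GB/day as a midpoint of the 20-30GB/day I mentioned earlier, and treats everything beyond the quota as arriving at 1GB/day. These are guesses for illustration, not figures from Microsoft, and they ignore the fact that the import may simply fail at the limit.

```python
def estimate_archive_days(data_gb: float, quota_gb: float = 100.0,
                          fast_rate: float = 25.0, expand_rate: float = 1.0) -> float:
    """Rough days to ingest data_gb into an empty archive.

    Data up to the quota is assumed to arrive at fast_rate GB/day;
    anything beyond it at expand_rate GB/day.
    """
    fast_part = min(data_gb, quota_gb)
    slow_part = max(data_gb - quota_gb, 0.0)
    return fast_part / fast_rate + slow_part / expand_rate


if __name__ == "__main__":
    # 80GB fits inside a 100GB archive; 150GB spills 50GB into auto-expansion.
    for size_gb in (80, 150):
        print(f"{size_gb}GB: about {estimate_archive_days(size_gb):.1f} days")
```

On those assumptions, 150GB into a 100GB archive works out at 4 days for the first 100GB and another 50 days for the remainder, which is why I'd look at other options.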

As a quick side-note, if you have complex migration requirements – multi-terabyte mailboxes, Symantec eVault migrations etc. – one of my favourite vendors is Cloudficient. They have some very cool technology to deal with these complex scenarios.

Summary

It seems a complex process when I write all this down – it really isn’t however. Like I say, I use this process a lot. Make sure you’re fussy on case etc. and you’ll find this a really useful tool.
