Lync 2013 High Availability

25/03/15 13:03 Filed in: Lync

Some thoughts on the high availability options for Lync 2013 - specifically around geo-dispersion.

====

Updated article - originally published 14/6/2014.

====

Microsoft Lync 2013 has great support for high availability and for disaster recovery - they do however take some understanding. In particular these areas need some consideration:

- The number of servers in a pool - how many do you need? 3? Really?

- Geo-dispersion of pools - I.e the stretching of pools across data centres.

Both of these items are critical to designing a highly available solution. I guess it goes back to the idea that just because you can do something, it’s sometimes questionable as to whether you should.

The number of servers in a pool for the pool to be functional, and geo-dispersion, are both fairly tightly linked so it’s important to understand them. Firstly, consider the number of servers required for a pool to be available.

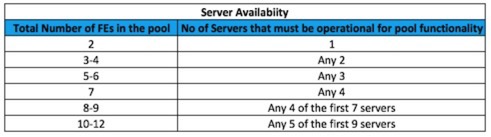

The above shows the minimum number of servers needed in a pool to prevent service startup failures.

In reality, the minimum number of servers is actually the minimum number of voters for quorum in the pool. With an even number of servers, the back-end SQL platform provides the dominant vote, with an odd number, the pool front-ends are able to achieve quorum.

The two pool scenario is a special case and isn’t generally recommended, although it is supported. You can read more about why that is, and other points in this article, here.

As a side note, the above also demonstrates why you should not add more servers to a Lync topology than you have ready for deployment. Plan on having 10 servers in your pool, but only 5 are ready? Don’t set up the other 5 until you’re ready to deploy - otherwise the pool will never reach quorum and will never start.

Now, why is this important when it comes to the idea of geo-dispersing a pool? I’ve seen some clients for example that have great network connections between their data centres, and instead of implementing two pools and using the in-built disaster recovery mechanisms, they decide to split the pool across two data centres. After all, as far as Lync is concerned it’s the same platform, right?

This was supported on Lync 2010 - it was known as the Lync Server 2010 Metropolitan Site Resiliency Model. In this model, front end servers in the same pool were separated between data centres. This is absolutely not supported on Lync 2013, due to the implementation of the Brick Model, and the decoupling of dependencies on the Back End database.

What if you ignore this supportability and geo-disperse your Lync 2013 pool? Well, this is a bad idea if you want to achieve any form of DR setup. If it’s just High Availability you want, then it’ll probably work. For DR though, it won’t. In a DR scenario for example you want to be able to lose one data centre with no interruption of service, right? Well, if you geo-disperse your pool you can’t guarantee this requirement will be met.

To understand why, you need to understand the brick model for user replication. In the Brick Model, the Windows Fabric replicates the user information to three front-end servers. The user always exists in three front ends - that is, the user is a member of routing group, and that routing group is replicated to three front-ends. One is the master, the other two are secondary. If one replica fails, another picks up the tasks for that user. After a while, if the failed replica doesn’t come online, a third one is regenerated on another server.

So why is this an issue? Well, you can’t control which servers contain your user replica sets. If you geo-disperse your pool, you could end up with the situation that the majority replica sets for your users are in one data centre - lose that data centre, and you lose service for those users. Note that you could end up with the situation where the pool thinks it’s functional - hey, it has enough servers - but the selection of users in that pool will never re-connect as their replica can never achieve quorum.

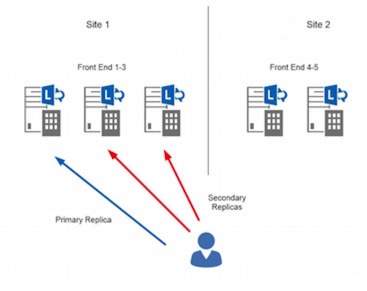

Let’s imagine the scenario then where we have a geo-dispersed pool with five front-ends like this - click on the image for a larger version:

We know that for a five server pool we need a minimum of 3 servers for the pool to be operational - so how could you lose Site 1? Also, you could end up with the situation with all of the user replicas being in one primary site - again, lose that site and you’ll not have service.

Even if you had the primary and a secondary replica in Site 2, and you lost Site 2, users in that replica set could not come back online as there would only be one replica in the primary site, and the replica set would never achieve quorum. If 2 replicas are lost, this results in quorum loss but users will be logged in with limited functionality mode (I.e. no contact list or presence etc).

You don’t have a DR capability do you, and arguably no real HA either.

Even if you had a four server pool for example - the SQL back-end would provide a vote in quorum, but you could still end up with the scenario where two of the user replicas are located in your failed data centre, and that replica will therefore never achieve quorum.

When designing high availability and disaster recovery for Lync, you need to ensure you utilise the right application layer models for those services available from the platform itself. Trying to stretch the pools, or use VMotion/Virtual Machine Mobility doesn’t really work. You’ll end up with unpredictable results in a DR scenario - and the last thing you want to be doing in a DR scenario is running around wondering why things are not working the way you expected!

I often also think the Lync Standard Edition deployments are under-rated for providing Lync Services - you can provide proper DR fail-over between data centres without any messing about trying to geo-stretch pools and the like. If you’re concerned about voice high availability, you can auto-route calls to the secondary Standard Edition server anyway. It’s important to consider your real RTO and RPO requirements when designing a highly available/recoverable solution - same for any technology solution.

EDIT: Related article: In praise of Lync Standard Edition

blog comments powered by Disqus