Update 25/5/15

Where am I now? Well, to be honest, the device rarely leaves my drawer, even after a small positive spell. I just don’t understand the form factor. It just seems like one big compromise. As somebody who can touch-type (properly), the keyboard is painfully inadequate. I’d rather take a small laptop with me.

I found I was carrying the SP3 and my iPad everywhere. What’s the point of that? So as it stands, I’m back to using a small laptop as my travel buddy. Far more capable, keyboard is better, and not so compromised. Ho hum. Let’s see what the SP4 brings.

Update 29/1/15

So, original article below from the end of October 2014. Where am I today? Well, surprisingly, my report back is a positive one! I’ve still the frustrations with Evernote and its terrible font size, but then my brain pointed out that when I really need it I can use Evernote in a web browser, and it’s just fine. I’d love them to fix it.

I’ve got used to the unit, and it’s now more often than not my travel buddy! Even to the point I put some videos on it the other day to watch on the train…and my iPad stayed home. All the surprised.

I still run into the challenges of my job requiring power, and then I have to resort to other kit, but the Surface Pro 3 has found a far stronger place in my work life than I ever expected.

Gone from 6/10 to an 8/10.

====

So, the Microsoft Surface Pro (3) – interesting concept. Not really a tablet or a laptop, but allegedly brilliant at both. I’ve got my hands on a rather shiny Surface Pro 3 – so I thought it would be an interesting journey to measure my usage over the week.

I’m going to try and use it as a replacement for my travel buddy – a 13” Macbook Pro Retina. Now, I get that some people who read my blog think I’m an Apple Fanboy. I honestly don’t think I am – I would say I’m a technology fan. I love stuff that’s cool, fun, and helps me get the job done. And is cool.

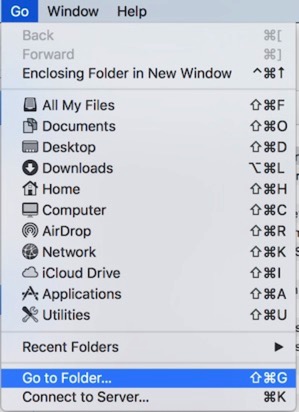

The last few years for me this has meant a Macbook Pro with Windows 8/8.1 running virtualised. Why? Well, I like Apple’s hardware, it fulfils the cool factor. And I’ve found the combination of the quality of the hardware with things like battery life really hit my technical cool spot. I like the power of the virtualisation capability of OS X – I can fire up anything very quickly, easily, and it just works. Now, before I get the hate-mail I know Windows laptops can do that too….but the oddity is that even though I have access to a wealth of laptops, phones, stuff etc. (for free, too, mostly), I always find myself gravitating to the Macbook Pro.

So, I plan on logging my journey into using a Microsoft Surface Pro 3 as my travel buddy. I will try to be objective, but of course personal preference will come in. I’ll of course welcome any feedback. Even from you – yes, you – you know who you are.

Day 1

Unpacking is an interesting experience. They’ve taken some lessons from Apple – trying to make you enjoy the unpacking. Did they achieve it? Yes, but with some caveats. Firstly, the battery for the pen, and the small stick-on pen holder thing…rapidly disappears across the floor. Detracts from the cool, but ultimately not important. It’s just not quite right – they could do better! Perfection should be pursued in everything, for perfection to be perceived in anything.

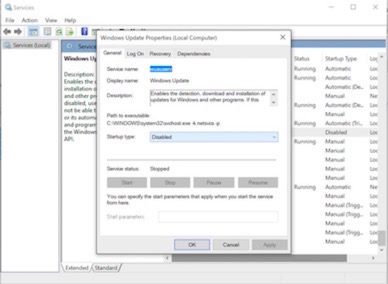

What next? Well, powering up the unit and getting going is no real hardship for anyone who powers up a new Windows laptop. Crap-ware free…apart from the 80+ Windows updates to be downloaded and installed, including one firmware update that reliably informed me that ‘something had gone wrong’. Nearly two hours that took. Two. Hours. Two hours between taking the shiny out of the box and me being able to use it to appear funny and attractive to women on Twitter.

Then I started installing all the software I use – of course it takes a while. Takes a while on OS X too, that and updates of course. I hate Windows Update. Hate it. With a passion.

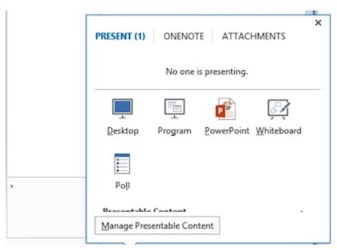

So. The Pen. Works really well. I’ll say it functions better in OneNote than the Evernote pen does on the iPad. On the other hand, OneNote is the only app I could really find it worked well in at all. Oh, wow, except for maybe Paint.

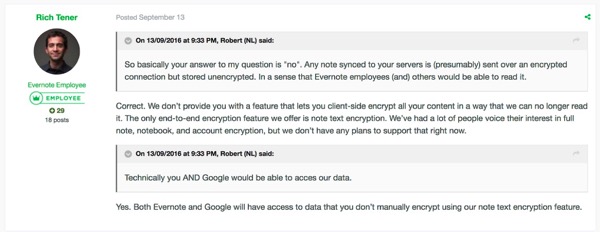

This led me to my first twitch. Evernote. I am a big Evernote fan. It’s different to OneNote – Evernote is more like an electronic scrapbook – I put everything in it. Using it on the Surface Pro 3 has been a challenge, but to be clear it’s the inadequacy of the software here, not the platform. The Windows Evernote client is utter tripe compared to the OS X one, so I instantly hit a usability barrier. No Pen support. Text is TINY compared to OS X – have to expand all the notes. Evernote Touch – well, the less said about that unstable, buggy, terrible POS the better.

Anyway, the Pen. Works brilliantly in OneNote. Can’t really find anything else worth talking about. Oh, wow, apart from the ‘where do you keep it’ conundrum. You get a stick on pad that you can stick on the keyboard to hold it (Steve Jobs would never have allowed it. Oh. Wait.). Personally I find slotting the pen on to the closed keyboard cover far more convenient. Just feels unfinished.

What about everything else? Well, I do find the mix between the touch environment and the desktop setup a bit weird – but I’m willing to embrace it. So I set all my email accounts up in both the normal Outlook 2013, and the non-Metro Mail client. I.e. use the not-Metro mail client for touch, normal Outlook for everything else.

I’ve also got 1Password and Evernote (touch and desktop) all set up and ready to go. Also have my OneDrive (for Business and personal) all sync’ed up and working. That wasn’t that hard…as you’d expect.

So…end of day 1 – where am I at? Well, let’s summarise, in bullets:

- Ok, we can do this.

- Wait, touching the screen for desktop apps is really fiddly.

- Using the pen for desktop apps is clumsy.

- Oh. Wait. Have a Bluetooth mouse somewhere – Microsoft Wedge – whoop.

- Daughter: How do I play my videos? What the hell? Plugs in phone, shows phone drive, nothing else. Boggle.

- Keyboard: Better than I expected! Expected almost spongy interim iPad type keyboard, actually feels just as good as my MBP keyboard.

- Setup: Ye gods, how many updates.

- Wait, Ethernet adapter looks like a 5 quid eBay job.

- Keep touching screen at inappropriate times – desktop apps – getting frustrated and grabbing pen, then resorting to mouse.

- Performance is ace – to the point that I’m not even sure which one it is I’m using.

- Hate Evernote on Windows.

- People use this as a tablet without the keyboard? What?

- Wait, even more what, you don’t get a keyboard with this?

- Screen – seems an odd resolution/shape? Looks ace though, as good as my iPad, maybe not quite the quality of my Retina MBP, but not enough to be readily noticeable.

So, end of Day 1, it’s setup, it’s working…So let’s see what happens. I shall keep you updated.

Actually, before I stop for day 1, interesting that I haven’t touched on the specs? Almost the same way I wouldn’t consider the specs of an iPad – it works, or it doesn’t. I think that means this works? Anyway, more anon.

Edited to add an obvious point – I haven’t paid for this device, I’m not even sure of its price point. I shall investigate further and include opinions on that element.

Day 2

Ok, firstly, using this thing on your lap is a PITA. I’ve fixed it – using a tray*. It’s uncomfortable and tiring to try and do productive work with it on your lap.

*NSFW comedy language on that link. Quite possibly one of the funniest videos on YouTube though. Honest.

So today is the first day I’ve tried to use this in anger…and I have to confess I failed and went back to using my MBP. I’ve been editing a lot of stuff today – screenshots, taking bits out of PDFs etc. – and quite frankly I was getting incredibly frustrated doing it on the SP3. To be fair I think it’s because the small Wedge mouse is tiny and not as usable as my main mouse. On that front, however, I was quite happy doing all that editing with just the touchpad on my MBP. Couldn’t do it on the SP3 without giving myself the rage of frustration.

So, not a massively successful day with it truth be told. I will keep trying however, I want to believe.

In the evening I was also attempting to use it just as a tablet – for looking stuff up and consuming. Quite big to do that with, and a little clumsy truth be told. Totally possible however. Of course I miss things like being able to stream stuff to my sound system or my TV – all things that my (Insert Any Apple Device Here) takes in its stride.

Day 3

Today I’ve spent the morning just using the SP3 – forcing myself to as I don’t have my MBP with me. Brave. How am I finding it? Well:

- On a table it works like a laptop – who knew! A good one – fast, easy to use, and I’m getting more used to the touch screen. One thing I have noticed is that I’m one of those fortunate people who is practically ambidextrous, so being able to use the mouse with one hand while randomly interacting with the screen with the other has made it far quicker to use than I initially realised.

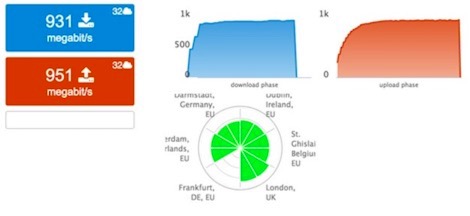

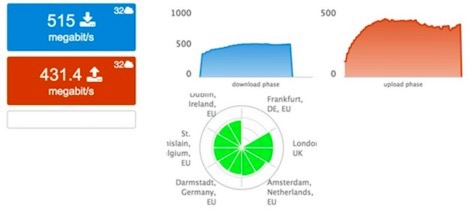

- It’s fast. Did I mention that? Not a massive spec this one either – i5 with 4GB. It’s perfectly quick though, to the point that I hadn’t really checked out the technical specifications.

- Still not convinced by the ‘tablet’ element of it. As a touch screen laptop though – it’s a good one – especially combined with the Pen.

The pen is interesting – found myself doing something earlier that surprised me. Sat on a conference call using the tablet part only, and the pen, to take notes in OneNote. Notes I immediately emailed to Evernote of course.

I think what’s becoming clear to me is that this is a great machine – fast, capable, and good to use…but I think I’ve become very aware of how dependent you are on the apps you use in your daily work-flow. These are often more important than the form factor of the device you’re using, aren’t they? For example, like I’ve said repeatedly above, I’m a very heavy Evernote user – I use it for everything. The Evernote client on Windows 8 is a challenge, whether you use the touch or the desktop app. I suppose if I were to persevere with the platform the correct thing would be to migrate to OneNote – while I get that OneNote is a great note taking app, it’s not good at what I also use Evernote for, which is as an electronic scrapbook for everything that appears on all my devices. I’d not only be looking at changing device, I’d also be looking at changing work-flow.

It’s amazing how important apps are. I’ve written before about how the only reason I keep with the phone I’m using is because of my investment in the apps on it.

How do I feel about this replacing my laptop and my iPad? Well, I think it can replace my iPad yes – except for when I’m out and about and just want to watch videos or read e-Magazines. It’s too big and bulky for that. It can though become my work travel buddy I think – one I take to meetings and work out on the road on.

Can it replace my MBP and my iPad? No, again, I don’t think it can. To be clear though I’m not sure that’s down to the form factor or the device itself – it’s down to the way I like to work, and the apps I like to use. I think that makes some of my more complex stuff just harder to produce on the Surface than it is on my Windows-equipped MBP. Incredibly subjective that one!

I’m still utterly undecided.

Day 4 (Ok, not counting the weekend)

I can confirm things are getting better! I actually used it as a tablet at the weekend to arrange some flights and some hotels – it was surprisingly workable. Perhaps I’m getting used to it?

As a laptop replacement I’m also forcing myself to use it – and I’m slowly starting to get it. Like I point out above however, I think the apps you are used to, and how you work, have a real bearing on whether a device like this will work for you.

Right now I’m feeling that it can work as my travel buddy, but perhaps not as my main weapon of content production choice. Maybe that will change? I am putting the effort in, honest!

I’ve discovered the MicroSD card slot for example, and now have BitLocker-encrypted File History set up and configured….and I like that. It is worlds apart from the ease of use of Time Machine on OS X however. I had to dig around and find it for a start.

The touch screen element is becoming more part of my general working as well, and again, I like that.

Any issues? Well…..

I can’t see me taking this out when I’m out for the day doing random (work/non-work) things. Say for media consumption for example. It’s too big. I’d take my iPad Air.

If I were in the office all day working on a complex design document, I’d probably take my proper laptop. Saying that, my decision is closer than it was – I reckon if I found myself working on such a document and only had the Surface Pro 3 available I wouldn’t be massively overwhelmed.

I guess where I’m going with this is that I’m struggling to find a place for the SP3. It’s not a replacement for my very powerful laptop (perhaps due to my usage type), and yet it’s a little too heavy to be a general travel ‘consumption’ device. What I can see myself using it for though is a replacement of my travel buddy for meetings and the like – it works well. It’s a great combination of light, powerful and comfortable form factor. Well, unless you want to use it on your lap, then it’s a PITA.

Day 5, 6 and 7

Ok I got ruthlessly distracted by the real world and the Microsoft Decoded event. Where am I at? Well, I like it more than I did on days 1 thru 3 I can tell you that much. I do actually use it in anger now, and I found myself taking notes at the show with just the pen, straight into OneNote. I was then of course later emailing those notes directly to Evernote where I keep everything else, but hey, it’s starting to work as a thing.

Did have a bit of a SNAFU earlier in the week when I got ruthlessly laughed at by somebody realising I was using my Macbook Pro as a tray so I could use the SP3 on my lap…..

I stated earlier that I’m struggling to find a place for it – and I think that’s still true, but the statement needs some further qualification. If I had my ‘main’ work machine – whether it be a laptop, desktop, or whatever – then the Surface Pro 3 could absolutely be my only mobile device for work. Bizarrely though, if I were off out on the Tube (like I am in a bit), it would be my iPad Air that would come with me…for media consumption and web browsing on the go the Surface Pro 3 just cannot compete. It’s too big and clumsy.

Could my iPhone 6 replace the iPad for media consumption? Well, here’s another little bizarre snippet. When the iPhone 6/6+ came out, I got a 6+…and I hated the size. Just didn’t get it. So I swapped it for a 6. Now, the 6 came with 128GB of storage, and that storage combined with the larger screen has changed how I use the unit – all of a sudden using it actively for Evernote (rather than just for reference) has become a reality…and guess what, I’m wishing I’d stuck with the 6+! I’m certain that if I’d kept the 6+, then that would be my travel consumption device of choice.

Complicated isn’t it? Of course my situation is further complicated by the fact that I have access to such a wide range of devices, consisting of a simply spectacular 13” Retina Macbook Pro (which I will say I think is the best laptop I’ve ever used…by a country mile), iPads, phones and some pretty powerful but less mobile kit. It’s because of this choice, I think, that I’m struggling to find a complete ‘space’ for the Surface Pro 3.

So, let’s try and simplify it.

If I had a Surface Pro 3 as a travel/mobile device, and a more powerful work unit (whether at home or at work), I think it would be a great solution. It would feel a bit compromised in that I think I’d need some form of lighter media/web consumption device – perhaps a larger phone.

Could it totally replace my travel & work laptops? Not a chance – for me anyway – I suspect my compute demands may be just too high.

My current perfect working environment?

iPad Air – personal media/web consumption

Surface Pro 3 – general travel and presenting type stuff.

MB Retina 13” – ‘proper’ work away from base, so VMs, writing, productivity.

Work Base – Multiple machines, from a 17” MBP to a Mac Pro.

This is a fantastically flexible environment…but then…look at the price for all those things! The SP3, a tablet that can replace your laptop? Nah.

I get this is a confusing piece of writing – but I think that tells a story in its own right doesn’t it?