When is plain old storage not plain old storage? When it’s Network Attached Storage (NAS), that’s when.

I don’t tend to delete stuff, as storage is relatively cheap and I usually find that if I delete something, at some point in the near future I’ll be irritated that I deleted it. I have my email archives going back to 1999, for example. Yes, yes, I know.

I’ve always shied away from network attached storage. Every time I’ve looked at it I’ve been caught by the network transfer rate bottleneck and the fact that locally attached storage has for the most part been a lot quicker. Most of my kit is SSD driven, and volume storage was fast Thunderbolt type. Typically I’d have a tiered storage approach:

- Fast SSD for OS/Apps/normal day to day stuff.

- Thunderbolt 3 connected SSD for my virtualisation stuff.

- Spinny ‘volume’ storage.

The thing is, my storage was getting into a mess. I had loads of stuff connected. About 12TB off the back of my iMac#1 (my virtualisation machine, for stuff that’s running all the time), and about another 15TB off the back of my everyday iMac Pro. That’s a lot of spinner stuff. Trying to ensure important stuff was backed up was becoming more and more of a headache.

So I finally gave in, mainly due to the rage around cabling more than anything else, and started investigating a small server type setup. Then it occurred to me I’d just be moving things about a bit, and I’d still have a ton of ad-hoc storage. So I started investigating the Network Attached Storage devices from the likes of Synology and QNAP.

Oh my, how wrong was I about NAS units. They’re so capable it’s ridiculous, and they’re not just raw storage. I have a few of them now, and they’re doing things like:

- Storage, because storage. Because I needed storage.

- A couple of virtual machines that run some specific scripts that I use constantly.

- Some SFTP sites.

- A VPN host.

- Plex for home media.

- Volume snapshots for my day to day work areas.

- Cloud-Sync with my Dropbox/OneDrive accounts.

- Backup to another unit.

- Backup to another remote unit over the Internet (this is more of a replica for stuff I use elsewhere really).

- Backup to a cloud service.

I did run into some performance issues, as you can’t transfer to/from them faster than the 1Gbps connection – which is effectively around 110MB/s (megabytes per second) – so 9-10 seconds per gigabyte. My issue was that I had other stuff trying to run over the 1Gbps link to my main switch, so if I started copying up large files over the single 1Gbps links from my laptops or iMac(s) then of course everything would slow down.
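As a rough sanity check on those numbers, here is a small Python sketch of the arithmetic. The 110MB/s figure is the observed rate quoted above. The function names and the use of decimal units (1GB taken as 1000MB) are my own assumptions for illustration.

```python
def line_rate_mb_per_s(gbps: float) -> float:
    """Theoretical ceiling in megabytes per second for a link speed in Gbps."""
    return gbps * 1000 / 8  # 1000 megabits per gigabit, 8 bits per byte


def seconds_per_gigabyte(mb_per_s: float) -> float:
    """Seconds to move 1GB (taken as 1000MB) at a given sustained rate."""
    return 1000 / mb_per_s


theoretical = line_rate_mb_per_s(1)  # ceiling before any protocol overhead
observed = 110.0                     # MB/s, the real-world figure quoted above

print(f"Theoretical ceiling: {theoretical:.0f} MB/s")
print(f"Observed: {observed:.0f} MB/s ({observed / theoretical:.0%} of line rate)")
print(f"Seconds per GB at observed rate: {seconds_per_gigabyte(observed):.1f}")
```

The gap between the 125MB/s ceiling and the 110MB/s seen in practice is the usual protocol overhead, and just over 9 seconds per gigabyte lines up with the 9-10 seconds quoted.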

That was fairly simple to fix as the Synology units I purchased support link aggregation – so I set up a number of ports using LACP link aggregation (effectively multiple 1Gbps links) and configured my main iMac machines with two 1Gbps link-aggregated ports. Now I can copy to/from the Synology NAS units at 110MB/s and be running other network loads to other destinations, and not really experience any slowdowns.

Just to be clear – as I think there’s some confusion out there on link aggregation – aggregating 2 x 1Gbps connections will not allow you to transfer between two devices at speeds >1Gbps, as it doesn’t load balance an individual transfer. It doesn’t, for example, send 1 packet down 1 link and the next packet down the next. What it typically does is pick a link for each conversation, usually by hashing the source and destination addresses, and *use that link for the whole operation you’re working on*.

If I transfer to two targets however – like two different Synology NAS units with LACP – I can get circa 110MB/s to both of them. Imagine widening a motorway – it doesn’t increase the speed, but what it does do is allow you to send more cars down that road. (Ok, so that often kills the speed, and my analogy falls apart but I’m OK with that).
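To make the "one transfer, one link" behaviour concrete, here is a minimal Python sketch. It assumes a hash-based distribution policy, which is how most implementations choose a link. The `pick_link` function, the CRC32 hash, and the host names are all hypothetical and for illustration only. Real switches and NAS units offer several hash policies (MAC, IP, IP plus port) and the exact function varies.

```python
import zlib


def pick_link(src: str, dst: str, num_links: int) -> int:
    """Choose a link index for a flow by hashing its endpoints.

    Hypothetical policy for illustration; real devices use their own hash.
    """
    key = f"{src}->{dst}".encode()
    return zlib.crc32(key) % num_links


LINK_MB_PER_S = 110.0  # per-link rate quoted in the post
NUM_LINKS = 2

# Every packet of one flow hashes to the same link, so a single copy
# between two devices never exceeds one link's rate.
links_used = {pick_link("imac", "nas-1", NUM_LINKS) for _ in range(1000)}
assert len(links_used) == 1
print(f"imac -> nas-1 stays on one link, capped at {LINK_MB_PER_S:.0f} MB/s")

# Flows to different targets are hashed independently, so they can land
# on different links and run at full rate side by side.
for dst in ("nas-1", "nas-2", "nas-3", "nas-4"):
    print(f"imac -> {dst}: link {pick_link('imac', dst, NUM_LINKS)}")
```

One caveat with a hash-based policy: two flows can also hash onto the same link, in which case they share that link's 1Gbps rather than getting one each.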

I can’t imagine going back to traditional locally attached storage for volume storage now. I do still have my fast SSD units attached; however, they’re tiny, and don’t produce a ton of cabling requirements.

I regularly transfer 70-100GB virtual machines up and down to these units, and even over 1Gbps this is proving to be acceptable. It’s not that far off locally attached spinning drives. It’s just taken about 15 minutes (I didn’t time it explicitly) to copy up an 80GB virtual machine, for example – that’s more than acceptable.
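For a rough idea of what to expect from copies of that size, here is a quick Python estimate. It assumes a sustained 110MB/s and decimal units (1GB taken as 1000MB). The `transfer_minutes` helper is a hypothetical name of my own.

```python
def transfer_minutes(size_gb: float, rate_mb_per_s: float = 110.0) -> float:
    """Minutes to copy size_gb gigabytes (1GB taken as 1000MB) at a sustained rate."""
    return size_gb * 1000 / rate_mb_per_s / 60


for size_gb in (70, 80, 100):
    print(f"{size_gb}GB at 110 MB/s: about {transfer_minutes(size_gb):.1f} minutes")
```

That puts an 80GB virtual machine at roughly 12 minutes in the best case, which fits with the "about 15 minutes" seen in practice once real-world overhead is included.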

The units also encrypt data at rest if you want – why would you not want that? I encrypt everything just because I can. Key management can be a challenge if you want to power off the units or reboot them as the keys for the encryption must either be:

- Available locally on the NAS unit via a USB stick or similar so that the volumes can be auto-mounted.

- Entered by hand, by typing in your encryption passphrase to mount the volumes manually.

It’s not really an issue, as my units have been up for about 80 days now. It’s not like they’re rebooting every few days.

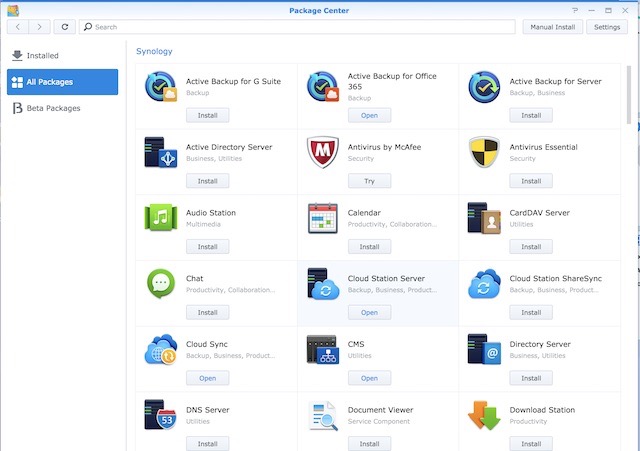

The Synology units have an App Store with all kinds of stuff in there – properly useful things too.

Anyway, I’m sure you can see where I’m going with this. These units are properly useful, and are certainly not just for storage – they are effectively small servers in their own right. I’ve upgraded the RAM in mine – some are easier to do than others – and also put some SSD read/write cache in my main unit. Have to say I wouldn’t bother with the SSD read/write cache, as it’s not really made any difference to anything beyond some benchmarking boosts. I wouldn’t notice if it weren’t there.

I’m completely sold. Best tech purchase and restructure of the year. Also, I’m definitely not now eyeing up 10Gbps connectivity. Oh no.

As a quick side-note on the 1Gbps/10Gbps thing – does anyone else remember trying 1Gbps connectivity for the first time over say 100Mbps connectivity? I remember being blown away by it. Now, not so much. Even my Internet connection is 1Gbps. 10Gbps here I come. Probably.